Artificial intelligence (AI) has entered the public consciousness with the emergence of powerful new AI chatbots and image generators, but the field has a long history dating back to the dawn of computing. Given how important AI is likely to be in transforming our lives over the next few years, it’s crucial to understand the roots of this rapidly evolving field. Here are 12 of the most important milestones in the history of AI.

1950 — Alan Turing’s groundbreaking AI paper

Renowned British computer scientist Alan Turing published a paper titled “Computing Machinery and Intelligence,” one of the first detailed investigations into the question: “Can machines think?”

Answering this question would first require tackling the difficult task of defining what constitutes a “machine” and what constitutes “thinking.” So Turing instead proposed a game: an observer watches a conversation between a machine and a human, and tries to determine which is the human. If the observer cannot reliably tell them apart, the machine wins the game. Although this did not prove that the machine “thinks,” the Turing test, as it became known, has since become a key benchmark for advances in AI.

1956 — Dartmouth Workshop

AI as a scientific field traces its origins to the Dartmouth Summer Research Project on Artificial Intelligence, a workshop held at Dartmouth College in 1956 and attended by a who’s who of influential computer scientists, including John McCarthy, Marvin Minsky, and Claude Shannon. The term “artificial intelligence” was coined for the event, and the group spent nearly two months discussing ways for machines to simulate learning and intelligence. The workshop marked the beginning of serious research into AI, laying the foundation for many of the breakthroughs that came in the decades that followed.

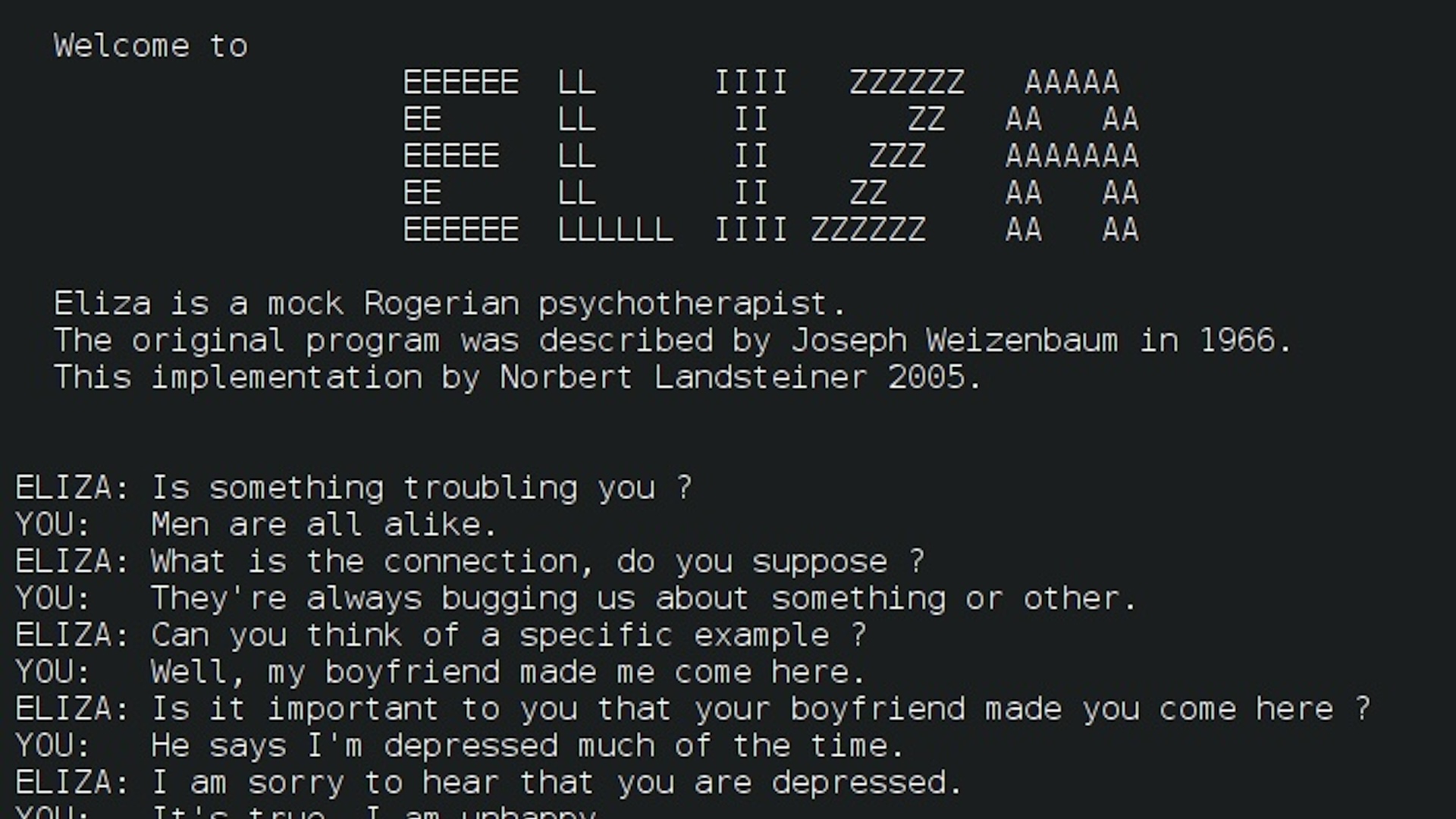

1966 — The first AI chatbot

MIT researcher Joseph Weizenbaum created the first AI chatbot, ELIZA. The underlying software was rudimentary, returning canned responses based on keywords detected in the prompt. Still, when Weizenbaum programmed ELIZA to act as a psychotherapist, people were reportedly astonished at how convincing the conversations were. The work spurred growing interest in natural language processing, including from the Defense Advanced Research Projects Agency (DARPA), which provided significant funding for early AI research.
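To make the keyword-and-canned-response idea concrete, here is a minimal Python sketch. It is not Weizenbaum’s original script: the rules, the wording of the replies, and the `respond` function are all invented for illustration.

```python
import re

# Hypothetical keyword rules, checked in order: (pattern, canned reply).
# None of these come from the original ELIZA script.
RULES = [
    (re.compile(r"\b(mother|father|family)\b", re.IGNORECASE),
     "Tell me more about your family."),
    # Capture what follows "I feel", dropping trailing punctuation.
    (re.compile(r"\bI feel (.+?)[.!?]*$", re.IGNORECASE),
     "Why do you feel {0}?"),
    (re.compile(r"\b(sad|unhappy|depressed)\b", re.IGNORECASE),
     "I am sorry to hear that. What do you think is causing it?"),
]

# Fallback used when no keyword is detected.
DEFAULT_REPLY = "Please go on."


def respond(prompt: str) -> str:
    """Return the canned reply for the first rule whose keyword appears."""
    for pattern, template in RULES:
        match = pattern.search(prompt)
        if match:
            # Templates without placeholders simply ignore the captured text.
            return template.format(*match.groups())
    return DEFAULT_REPLY


if __name__ == "__main__":
    for line in ["My mother called me yesterday", "I feel tired.", "Nice weather"]:
        print(f"> {line}\n{respond(line)}")
```

The program has no model of meaning at all; it only reflects fragments of the user’s own words back, which is roughly why the apparent fluency of such conversations surprised people.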

1974-1980 — The first “AI Winter”

It didn’t take long for the early enthusiasm for AI to begin to fade. The 1950s and 1960s were a fertile time for the field, but amid that enthusiasm, leading experts made bold claims about what machines would be able to do in the near future. Frustration grew as the technology failed to meet those expectations. A highly critical report on the field by British mathematician James Lighthill led the British government to cut almost all funding for AI research. DARPA also drastically cut its funding during this time, creating what has been called the first “AI winter.”

1980 – The proliferation of “expert systems”

Despite disillusionment with AI in many quarters, research continued, and by the early 1980s the technology was beginning to attract the attention of the private sector. In 1980, researchers at Carnegie Mellon University built an AI system called R1 for Digital Equipment Corporation. The program was an “expert system,” an approach to AI that researchers had been experimenting with since the 1960s. These systems reason with logical rules over large databases of expert knowledge. The program saved the company millions of dollars a year and launched a boom in industrial adoption of expert systems.
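The idea of reasoning with logical rules over a store of knowledge can be sketched in a few lines of Python. This is an illustrative toy using forward chaining, one common inference strategy, and is not a description of how R1 itself was built; the rule names and the `forward_chain` function are hypothetical.

```python
# Hypothetical rules: when every premise is a known fact, add the conclusion.
RULES = [
    ({"needs_database", "high_traffic"}, "add_disk_controller"),
    ({"add_disk_controller"}, "add_extra_cabinet"),
]


def forward_chain(facts, rules):
    """Apply the rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when its premises are a subset of the known facts.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"needs_database", "high_traffic"}, RULES)
    print(sorted(derived))
```

Every conclusion here traces back to a rule that a person wrote by hand, which is what made such systems predictable but also costly to build and maintain.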

1986 — Deep Learning Basics

Most research up to that point had focused on “symbolic” AI, which relied on handcrafted logic and knowledge databases. But since the field’s inception, there had also been competing work on brain-inspired “connectionist” approaches, which continued quietly in the background and only resurfaced in the 1980s. These techniques involved training “artificial neural networks” on data so that they learned the rules themselves, rather than having the systems programmed by hand. In theory, this should have resulted in more flexible AI, not bound by the preconceptions of its creators, but training neural networks proved difficult. In 1986, Geoffrey Hinton, who would later be called one of the “godfathers of deep learning,” published a paper that popularized “backpropagation,” a training technique that underpins most AI systems today.

1987-1993 — The second “AI Winter”

Following the experience of the 1970s, Minsky and fellow AI researcher Roger Schank warned that AI hype was reaching unsustainable levels and that the field was in danger of another retreat. They coined the term “AI winter” during a panel discussion at a meeting of the Association for the Advancement of Artificial Intelligence in 1984. Their warning proved prescient, as the limitations of expert systems and their dedicated AI hardware began to become apparent in the late 1980s. Industry spending on AI fell dramatically, and most start-up AI companies went out of business.

1997 — Deep Blue defeats Garry Kasparov

Despite the booms and busts, AI research progressed steadily through the 1990s with little public attention, until 1997, when IBM’s expert system Deep Blue defeated world chess champion Garry Kasparov in a six-game series. Prowess at complex games has long been seen by AI researchers as a key indicator of progress, so beating the world’s best human player was seen as a major milestone and made headlines around the world.

2012 – AlexNet kicks off the deep learning era

Despite much academic research, neural networks were considered impractical for real-world applications. To be useful, they needed many layers of neurons, and implementing large networks on traditional computer hardware was inefficient. In 2012, Hinton’s doctoral student Alex Krizhevsky won an image recognition competition by a large margin with a deep learning model called AlexNet. The secret was using specialized chips called graphics processing units (GPUs) that could run much deeper networks efficiently. This laid the foundation for the deep learning revolution that has powered most AI advances since.

2016 – AlphaGo beats Lee Sedol

While AI had already conquered chess, the much more complex Chinese board game Go remained a challenge. But in 2016, Google DeepMind’s AlphaGo defeated Lee Sedol, one of the best Go players in the world, in a five-game series. Experts had believed such a feat was still years away, so the result raised expectations for advances in AI. This was in part due to the versatility of the algorithm underlying AlphaGo, which relies on an approach called reinforcement learning, in which AI systems effectively learn through trial and error. DeepMind later extended and refined this approach to create AlphaZero, which can teach itself to play a variety of games.

2017 — Invention of the Transformer architecture

Despite huge advances in computer vision and game playing, deep learning had been slow to make progress in language tasks. Then, in 2017, Google researchers introduced a new neural network architecture called the “transformer,” which was able to take in vast amounts of data and make connections between distant data points. This proved particularly useful for the complex task of language modeling, allowing for the creation of AI that could handle a variety of tasks, including translation, text generation, and document summarization. All of today’s major AI models rely on this architecture, including OpenAI’s image generator DALL-E and Google DeepMind’s groundbreaking protein folding model AlphaFold 2.

2022 – Launch of ChatGPT

On November 30, 2022, OpenAI released ChatGPT, a chatbot powered by a large language model from its GPT-3.5 series. The tool became a worldwide sensation, attracting more than one million users in less than a week and reaching an estimated 100 million within about two months. It was the first time that the public had been able to experience the latest AI models, and most were astounded. The service has been credited with sparking an AI boom, which has seen billions of dollars invested in the sector and spawned a host of imitators from big tech companies and startups alike. It also sparked concerns about the pace of AI progress, prompting an open letter in which prominent tech leaders called for a pause on AI research to allow time to evaluate the technology’s impact.