We all know that companies, especially hyperscalers and cloud builders, and now large enterprises looking to leverage generative AI, are spending huge amounts of money on AI accelerators and related chips to create AI training and inference clusters.

But try to calculate that amount. Try. The numbers are all over the place. And not just because it’s hard to draw the line separating what constitutes an AI chip from the systems that surround it. One of the problems with estimating the size of the AI market is that no one actually knows what happens to the servers after they’re built and sold, or what they’re being used for. For example, how do we know how much of a machine with a GPU is actually tackling AI work or HPC work?

In December, as we were gearing up for the holidays, I took a closer look at IDC’s GenAI spending forecast, which was interesting because it categorizes GenAI workloads separately from other types of AI and breaks out the hardware, software, and services spending needed to build GenAI systems. Of course, I also mentioned the original and revised forecasts that AMD CEO Lisa Su made in 2022 and 2023 for all kinds of data center AI accelerators, including not just GPUs but all other chips.

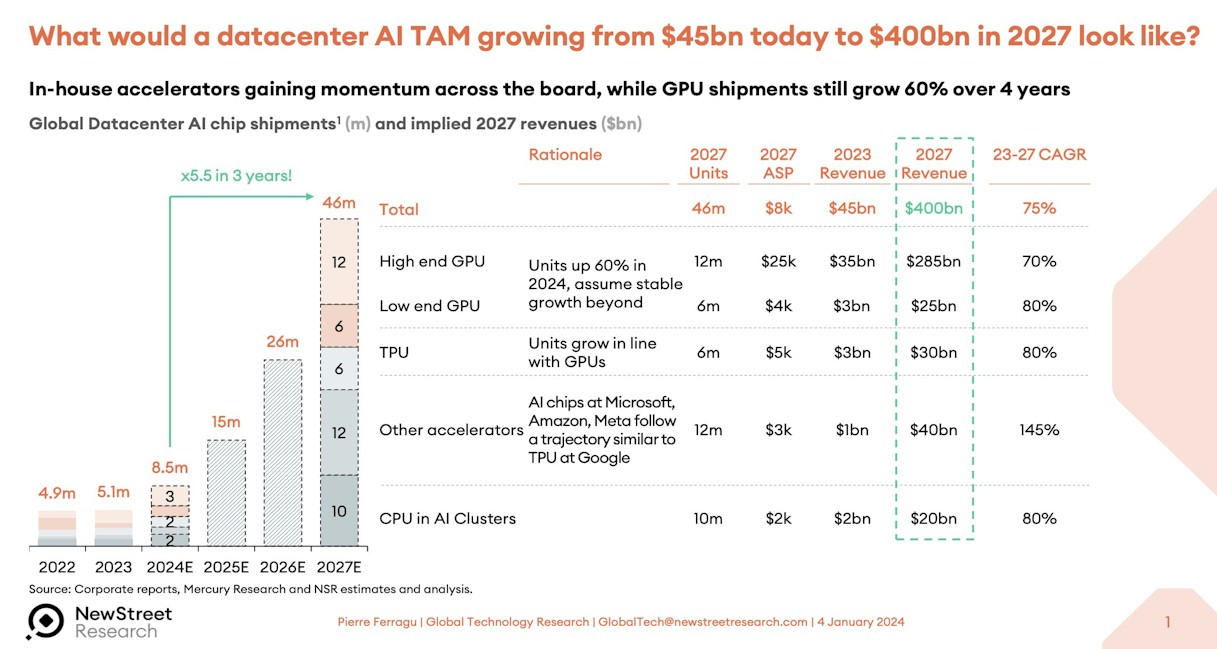

Just to be clear, Su originally said the data center AI accelerator market would be $30 billion in 2023, growing at a compound annual growth rate of about 50% to more than $150 billion by the end of 2027. A year later, with the launch of the “Antares” Instinct MI300 series GPUs in December and the GenAI boom exploding, Su said AMD now estimates the data center AI accelerator market at $45 billion in 2023, growing at a compound annual growth rate of more than 70% to more than $400 billion by 2027.
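The two forecasts can be sanity-checked with the standard compound growth formula. The sketch below is only an illustration: the function names are made up for this example, and it assumes that 2023 to 2027 counts as four compounding periods, which the forecasts themselves do not spell out.

```python
# Sanity check on the compound annual growth rates quoted for the AMD forecasts.
# Assumption: 2023 -> 2027 is treated as four compounding periods.


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` periods."""
    return start * (1 + rate) ** years


YEARS = 2027 - 2023  # four compounding periods (assumed)

# Original forecast: $30 billion in 2023 growing at about 50% per year.
original_2027 = project(30.0, 0.50, YEARS)

# Revised forecast: $45 billion in 2023 reaching $400 billion in 2027.
revised_rate = cagr(45.0, 400.0, YEARS)

print(f"Original forecast, 2027: ${original_2027:.1f} billion")
print(f"Revised forecast, implied CAGR: {revised_rate:.1%}")
```

Under that assumption, the original forecast lands just above $150 billion and the revised one implies a growth rate a little above 70%, which is consistent with the figures as quoted.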

This is for accelerators only, not servers, switches, storage or software.

Pierre Ferragu of New Street Research, who does some very good work in the technology space, looked at how this $400 billion data center accelerator TAM might break down and tweeted about it:

This is still a very large number, and it assumes that AI server, storage, and switching revenue will reach approximately $1 trillion at the end of the TAM forecast period.

Coming back to reality for a bit, as we look ahead to 2024, I got hold of some GPU revenue forecasts from Aaron Rakers, Managing Director and Technology Analyst at Wells Fargo Equity Research, and had a little fun playing around with a spreadsheet. The model covers data center GPU revenue from 2015 through 2022, estimates 2023 (which had not yet finished when the forecast was made), and extends through 2027. The Wells Fargo model predates the revised forecasts AMD has made in recent months, which call for $4 billion in GPU revenue in 2024 (we think it will be $5 billion).

Either way, Wells Fargo’s model puts data center GPU sales in 2023 at $37.3 billion on shipments of 5.49 million units. Shipments nearly doubled, and that count includes all kinds of GPUs, not just high-end ones, while GPU revenue rose by a factor of 3.7. Data center GPU shipments in 2024 are projected to be 6.85 million units, up 24.9%, with revenue of $48.7 billion, up 28%. The 2027 forecast projects GPU shipments of 13.51 million units and data center GPU revenue of $95.3 billion. In this model, Nvidia’s share of revenue is 98% in 2023, dropping to 87% in 2027.

So here we go. Gartner and IDC recently released statistics and forecasts on AI semiconductor sales. It’s worth skimming through these to see where AI chips are now and where they’ll be in the next few years. The reports published by these companies are always short on data (because they have to make a living too), but they usually have something valuable to offer that we can use.

Let’s start with Gartner. About a year ago, Gartner released its market research on AI semiconductor sales in 2022, with projections for 2023 and 2027. Then a few weeks ago, it released revised forecasts with sales for 2023 and projections for 2024 and 2028. The second report also included some statistics, and we have combined the two to create the following table:

![]()

Compute electronics is presumably a category that includes PCs and smartphones, but even Alan Priestley, the vice president and analyst at Gartner who created these models, acknowledges that every PC chip sold by 2026 will be an AI PC chip, since every laptop and desktop CPU will include some sort of neural network processor.

AI chips that accelerate servers are what we care about here, and revenue from these chips (estimated without the value of the HBM, GDDR, or DDR memory that comes with them) is expected to be $14 billion in 2023, growing 50% to $21 billion in 2024. But the compound annual growth rate for server-oriented AI accelerators is expected to be only about 12% between 2024 and 2028, with sales reaching $32.8 billion. Priestley says custom AI accelerators such as TPUs and Amazon Web Services’ Trainium and Inferentia chips (to name just two) will generate just $400 million in revenue in 2023 and $4.2 billion in 2028.

If the AI chip represents half the value of the compute engine, and the compute engine represents half the cost of the system, then adding up these relatively small numbers, the revenue potential from an AI system in the data center could be quite significant. Again, this depends on where and how Gartner draws the line.
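To make that rule of thumb concrete, here is a rough sketch that scales the Gartner server AI chip figures quoted above by the two "half" ratios. The function name and dictionary are invented for this illustration, both 50% shares are the hypothetical ratios from the paragraph rather than measured values, and the resulting system figures are back-of-envelope numbers, not anything Gartner has published.

```python
# Back-of-envelope scaling from AI chip revenue to AI system revenue.
# Assumptions (hypothetical, taken from the rule of thumb in the text):
#   - the AI chip is half the value of the compute engine
#   - the compute engine is half the cost of the system


def implied_system_revenue(
    chip_revenue: float,
    chip_share_of_engine: float = 0.5,
    engine_share_of_system: float = 0.5,
) -> float:
    """Scale chip revenue up to system revenue using the two share ratios."""
    return chip_revenue / (chip_share_of_engine * engine_share_of_system)


# Gartner server AI accelerator revenue quoted in the text, in $ billions.
gartner_server_ai_chips = {2023: 14.0, 2024: 21.0, 2028: 32.8}

implied_systems = {
    year: implied_system_revenue(revenue)
    for year, revenue in gartner_server_ai_chips.items()
}

for year, revenue in implied_systems.items():
    print(f"{year}: roughly ${revenue:.1f} billion in implied AI system revenue")
```

With both shares at one half, system revenue is simply four times chip revenue, so any shift in where the line around an "AI chip" is drawn moves the system total by the same multiple.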

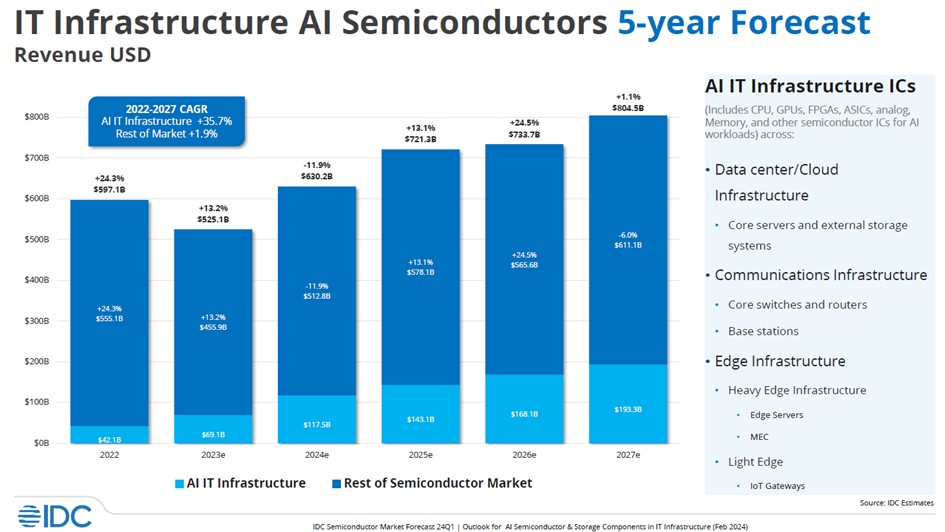

Now, let’s look at what IDC thinks about the AI semiconductor and AI server markets. They published this interesting chart a few weeks ago:

In this chart, IDC compiles all revenue from CPUs, GPUs, FPGAs, custom ASICs, analog devices, memory, and other chips used in data centers and edge environments. It then isolates the portion of that revenue from compute, storage, switches, and other devices that applies to AI training and AI inference systems. This is not the value of the entire system, but the value of all the chips in the system. So it doesn’t include chassis, power supplies, cooling, motherboards, riser cards, racks, system software, and so on. The chart has actual data for 2022, and as you can see, the figures for 2023 through 2027 are still estimates.

In this IDC analysis, the AI portion of the semiconductor market grew from $42.1 billion in 2022 to an estimated $69.1 billion in 2023, a growth rate of 64.1%. This year, IDC expects AI chip revenue (which means not just XPU sales, but all the chip content that goes into AI systems in the data center and at the edge) to grow 70% to $117.5 billion. Running the numbers from 2022 to 2027, IDC estimates that total bill-of-materials revenue for chip content in data center and edge AI systems will grow at a compound annual growth rate of 28.9% to $193.3 billion in 2027.

The blog post on which this graph is based was published at the end of May and is based on reporting done in February, so please take that time lag into account.

In that post, IDC added some server revenue figures and broke out AI servers separately from servers used for other workloads. Back in October 2023, we did some spreadsheet crunching with the raw IDC server numbers to get an idea of AI server spending, but here’s the actual data.

IDC estimates that the number of servers sold worldwide in 2023 fell 19.4% to just under 12 million. But because the average selling price of AI servers is so much higher (we estimate it to be 45 to 55 times that of typical servers supporting boring infrastructure applications), AI server revenue rose by a factor of 3.2 to $31.3 billion, measured against last year’s estimate of $9.8 billion for 2022, and accounted for 23% of the market. IDC projects that AI server sales will reach $49.1 billion by 2027. IDC has not released an updated forecast for overall server revenue in 2027, but as of the end of 2023, that figure was $189.14 billion, as shown here.
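The shares implied by these IDC figures are easy to work out. The sketch below is only a rough illustration: the variable names are invented for this example, the 2023 total is backed out from the rounded 23% share rather than taken from IDC, and the 50% scenario is a hypothetical comparison point, not an IDC number.

```python
# Shares implied by the IDC server figures quoted above, in $ billions.
ai_server_revenue_2023 = 31.3
ai_server_share_2023 = 0.23  # AI servers as a fraction of 2023 server revenue
ai_server_revenue_2027 = 49.1
all_server_revenue_2027 = 189.14  # forecast as of the end of 2023

# Total 2023 server revenue implied by the rounded 23% share (an estimate).
implied_total_2023 = ai_server_revenue_2023 / ai_server_share_2023

# AI server share of the 2027 forecast if both IDC figures hold.
ai_server_share_2027 = ai_server_revenue_2027 / all_server_revenue_2027

# Hypothetical scenario: AI servers at half of the 2027 total.
ai_revenue_at_half_2027 = 0.5 * all_server_revenue_2027

print(f"Implied total server revenue, 2023: ${implied_total_2023:.1f} billion")
print(f"AI server share of 2027 forecast: {ai_server_share_2027:.1%}")
print(f"AI server revenue at a 50% share in 2027: ${ai_revenue_at_half_2027:.1f} billion")
```

Taken at face value, the two 2027 figures put AI servers at roughly a quarter of server revenue, only slightly above the 23% share already reached in 2023.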

Incidentally, in the way IDC segments the server market, machines that run AI on the CPU using its native matrix or vector engines are considered unaccelerated and therefore do not count as “AI servers” in our jargon.

Either way, we believe that the 2027 AI server revenue projection is too low, or the 2027 overall server revenue projection is too high, or both. We believe that AI servers with some kind of acceleration will account for just under half of server revenue by 2027, on the assumption that data centers will be doing a lot of accelerated and generative AI.

But that is admittedly a hunch, and we’ll be keeping an eye on this.