Researchers at the University of Minnesota have introduced a hardware innovation called CRAM, which promises to reduce AI energy usage by up to 2,500 times by processing data in memory, greatly improving AI efficiency.

The device has the potential to reduce the energy consumption of artificial intelligence by a factor of at least 1,000.

Engineering researchers at the University of Minnesota, Twin Cities have demonstrated an advanced hardware device that could reduce the energy consumption of artificial intelligence (AI) computing applications by a factor of at least 1,000.

The research is published in npj Unconventional Computing, a peer-reviewed scientific journal published by Nature. The researchers hold several patents on the technology used in the device.

As demand for AI applications grows, researchers have been exploring ways to keep costs low and create more energy-efficient processes while keeping performance high. Typically, machines or artificial intelligence processes consume large amounts of power and energy to transfer data between logic (where information is processed in the system) and memory (where data is stored).

Introducing CRAM Technology

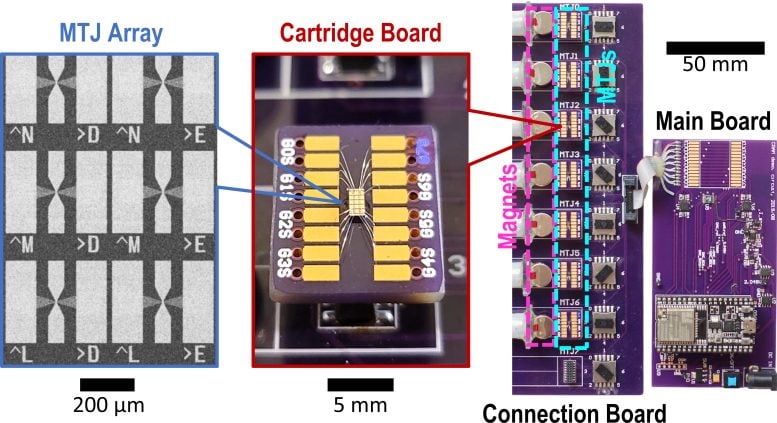

A team of researchers from the University of Minnesota College of Science and Engineering has demonstrated a new model called computational random access memory (CRAM) in which data never leaves the memory.

“This work is the first experimental demonstration of CRAM, in which data can be processed entirely within the memory array, without ever leaving the grid where a computer stores information,” said Yang Lv, a postdoctoral researcher in the University of Minnesota’s Department of Electrical and Computer Engineering and first author of the paper.

A custom-built hardware device designed to help make artificial intelligence more energy efficient. Credit: University of Minnesota, Twin Cities

The International Energy Agency (IEA) issued a global energy use forecast in March 2024, predicting that AI energy consumption is likely to roughly double from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026. That is roughly equivalent to the electricity consumption of the entire country of Japan.

According to the authors of the new paper, a CRAM-based machine learning inference accelerator is estimated to achieve an energy improvement on the order of 1,000 times. Other examples have shown energy savings of 2,500 and 1,700 times compared to traditional methods.
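To put those factors in perspective, the short Python sketch below converts each reported reduction factor into the share of baseline energy that would remain. It assumes the same workload running on a conventional system as the baseline; the function name `remaining_energy_fraction` is purely illustrative and does not come from the paper.

```python
def remaining_energy_fraction(reduction_factor: float) -> float:
    """Fraction of baseline energy left after an N-times reduction.

    Assumption: the baseline is the same workload on a conventional system.
    """
    if reduction_factor <= 0:
        raise ValueError("reduction_factor must be positive")
    return 1.0 / reduction_factor


# Reduction factors quoted in the article.
for factor in (1_000, 1_700, 2_500):
    fraction = remaining_energy_fraction(factor)
    print(f"{factor}x reduction -> {fraction:.4%} of baseline energy")
```

In other words, a 1,000-times reduction means the same task would use one-thousandth (0.1 percent) of the baseline energy.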

Evolution of research

This research has been conducted for over 20 years.

“Twenty years ago, our initial concept of using memory cells directly for computing was considered crazy,” said Jian-Ping Wang, senior author of the paper and a Distinguished McKnight Professor and Robert F. Hartmann Chair in the Department of Electrical and Computer Engineering at the University of Minnesota.

“Thanks to an ever-evolving group of students since 2003 and a truly interdisciplinary team of faculty built at the University of Minnesota, from physics, materials science and engineering, computer science and engineering to modeling and benchmarking to hardware creation, we have been able to get positive results and now demonstrate that this kind of technique is feasible and ready to be built into technology,” Wang said.

The research is part of a consistent, long-term effort by Wang and his collaborators that builds on their groundbreaking, patented research into magnetic tunnel junction (MTJ) devices, nanostructured devices used to improve hard drives, sensors and other microelectronic systems, including magnetic random access memory (MRAM) used in embedded systems such as microcontrollers and smartwatches.

The CRAM architecture enables true computation in and by memory, breaking down the wall between computation and memory that is the bottleneck in the traditional von Neumann architecture, a theoretical design for a stored-program computer that serves as the basis for almost all modern computers.

“As an extremely energy-efficient, digital-based, in-memory computing substrate, CRAM is extremely flexible in that computations can be performed anywhere in the memory array, so we can reconfigure CRAM to best meet the performance needs of different AI algorithms,” said Ulya Karpuzcu, an expert in computing architecture, co-author of the paper and an associate professor in the Department of Electrical and Computer Engineering at the University of Minnesota. “This is more energy efficient than today’s traditional building blocks for AI systems.”

CRAM efficiently uses an array structure to perform calculations directly within the memory cells, eliminating the need for slow, energy-intensive data transfers, Karpuzcu explained.
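The difference Karpuzcu describes can be illustrated with a toy model. The Python sketch below is not the CRAM hardware or the authors' method: `ToyMemory`, `nand_conventional`, and `nand_in_array` are hypothetical names, and NAND is used only because it is a simple universal gate. The sketch counts how many values cross the boundary between memory and logic for a single gate operation.

```python
from dataclasses import dataclass


@dataclass
class ToyMemory:
    """Toy bit array that counts values crossing the memory/logic boundary."""
    cells: list
    transfers: int = 0

    def load(self, addr: int) -> int:
        # Conventional path: a value leaves memory for the logic unit.
        self.transfers += 1
        return self.cells[addr]

    def store(self, addr: int, value: int) -> None:
        # Conventional path: a result travels back into memory.
        self.transfers += 1
        self.cells[addr] = value

    def nand_in_array(self, a: int, b: int, out: int) -> None:
        # Hypothetical in-memory gate: inputs and output stay in the array.
        self.cells[out] = 1 - (self.cells[a] & self.cells[b])


def nand_conventional(mem: ToyMemory, a: int, b: int, out: int) -> None:
    """Von Neumann style: load both operands, compute, store the result."""
    x = mem.load(a)
    y = mem.load(b)
    mem.store(out, 1 - (x & y))


conventional = ToyMemory([1, 1, 1])
nand_conventional(conventional, 0, 1, 2)

in_memory = ToyMemory([1, 1, 1])
in_memory.nand_in_array(0, 1, 2)

print("conventional transfers:", conventional.transfers)
print("in-memory transfers:", in_memory.transfers)
```

Both paths leave the same result in the array. The conventional path moves three values across the boundary for one gate, while the in-array path moves none, and those avoided transfers are the ones the article identifies as slow and energy-intensive.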

The most efficient short-term random access memory (RAM) devices use four or five transistors to encode a 1 or a 0, but a single MTJ, a spintronic device, can perform the same function faster and at a fraction of the energy, and it is resilient to harsh environments. Spintronic devices use the spin of electrons, rather than electric charge, to store data, making them a more efficient alternative to traditional transistor-based chips.

The team now plans to work with semiconductor industry leaders, including those in Minnesota, to conduct large-scale demonstrations and build hardware that will advance AI capabilities.

Reference: Yang Lv, Brandon R. Zink, Robert P. Bloom, Hüsrev Cılasun, Pravin Khanal, Salonik Resch, Zamshed Chowdhury, Ali Habiboglu, Weigang Wang, Sachin S. Sapatnekar, Ulya Karpuzcu, Jian-Ping Wang, “Experimental Demonstration of Magnetic Tunnel Junction-Based Computational Random Access Memory,” July 25, 2024, npj Unconventional Computing.

DOI: 10.1038/s44335-024-00003-3

In addition to Lv, Wang, and Karpuzcu, the team included University of Minnesota Department of Electrical and Computer Engineering researchers Robert Bloom and Husrev Cilasun, McKnight Distinguished Professor and Robert and Marjorie Henle Chair Sachin Sapatnekar, former postdoctoral researchers Brandon Zink, Zamshed Chowdhury, and Salonik Resch, as well as University of Arizona researchers Pravin Khanal, Ali Habiboglu, and professor Weigang Wang.

The research was supported by grants from the U.S. Defense Advanced Research Projects Agency (DARPA), the National Institute of Standards and Technology (NIST), the National Science Foundation (NSF), and Cisco Systems, Inc. Research involving nanodevice patterning was conducted in collaboration with the Minnesota Nano Center, and simulation/computational work was performed in collaboration with the Minnesota Supercomputing Institute at the University of Minnesota.