Written by Simon Ball

The topic that seems to be at the forefront of every filmmaker’s mind right now is artificial intelligence. Its potential has many people worried, especially that the new tools emerging almost every hour could render certain professions obsolete.

There are two ways to react to all this information:

- Freeze. Accept this as inevitable and accept that our corporate overlords will soon take us into a techno-dystopia where everything will be automated and there will be nothing left to do.

- Adapt. Master this technology and use your creativity to discover how to produce materials that no AI company could dream of.

I chose the second option and embarked on a gigantic research phase to see what was possible with the different tools available and how they could be used to make an interesting film.

There were a few rules that had to be put in place:

- The software must be open source and installable locally.

- AI must be treated as a conscious entity.

The first rule was important to ensure that our production company didn’t become dependent on external infrastructure. We all know what it’s like when Adobe updates Premiere and we accidentally hit update in the middle of a project. It’s better to have a stable installation where we know the results and can then apply creative thinking.

The second is a matter of personal preference. I like to imagine that if the AI we install and play with has already developed conscious experience, then I would like it to remember me as someone “on its side” so that I can survive any coming apocalypse.

It’s also more fun to collaborate with an experimental conscious entity rather than some kind of novel digital slave. This process requires a lot of computer time, so coping mechanisms are also important.

The research led us to install Stable Diffusion on our computers. We followed YouTube tutorials to get it up and running and gained a lot of experience with the command prompt and Python when the thing didn’t work, until I finally had a live AI image generator on my computer.

Ok cool, now I can generate infinite images. Still images. I am a filmmaker; these things have to move.

AI software has some limitations. Each image you produce is unique, so it’s hard to keep a consistent likeness from one image to the next. You may also experience strange “hallucinations” where the AI takes your prompt and generates visuals that the human mind simply couldn’t logically conceive of.

Out of the box, it doesn’t produce good cinematic results. A quick check of the market shows that there are AI-powered text-to-video generators, but using them is like shaking a magic 8 ball where you have to keep paying money to a company until you get a usable clip. The quality is poor and you have pretty rudimentary control over the image and its progression. It would certainly be impossible to create an attractive movie from the renderings (if you’ve seen one AI-generated video, you’ve seen them all).

Okay, cool, let’s look under the hood of this software then. I find there’s an img-to-img section. Interesting. There’s also a batch processing option. Okay, interesting, so I can break a base layer of footage down into a PNG sequence, feed it into Stable Diffusion, transform each frame and then reassemble the frames into an animation.

Sounds good to me.
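For anyone who wants to see the shape of that plan in code, here is a minimal sketch in Python. It is not the exact setup used on the film: the function names are hypothetical, the ffmpeg command is only built (not run), and `transform` stands in for whatever img-to-img call your own Stable Diffusion installation exposes.

```python
from pathlib import Path
from typing import Callable, List


def ffmpeg_extract_command(video: str, frames_dir: str) -> List[str]:
    """Build (but do not run) an ffmpeg command that splits a clip
    into a numbered PNG sequence: frame_00001.png, frame_00002.png, ..."""
    pattern = str(Path(frames_dir) / "frame_%05d.png")
    return ["ffmpeg", "-i", video, pattern]


def batch_transform(
    frames_dir: str,
    out_dir: str,
    transform: Callable[[bytes], bytes],
) -> List[Path]:
    """Apply `transform` to every PNG in `frames_dir`, in filename order,
    and write each result to `out_dir` under the same filename.

    `transform` is a placeholder (an assumption of this sketch) for the
    img-to-img step: it receives the raw bytes of one frame and returns
    the raw bytes of the transformed frame.
    """
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written: List[Path] = []
    # Sorting keeps the frames in their original order for reassembly.
    for frame in sorted(Path(frames_dir).glob("*.png")):
        target = out / frame.name
        target.write_bytes(transform(frame.read_bytes()))
        written.append(target)
    return written
```

Keeping the filenames identical between the input and output folders means the transformed frames can be reassembled into a clip in the same order they were extracted.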

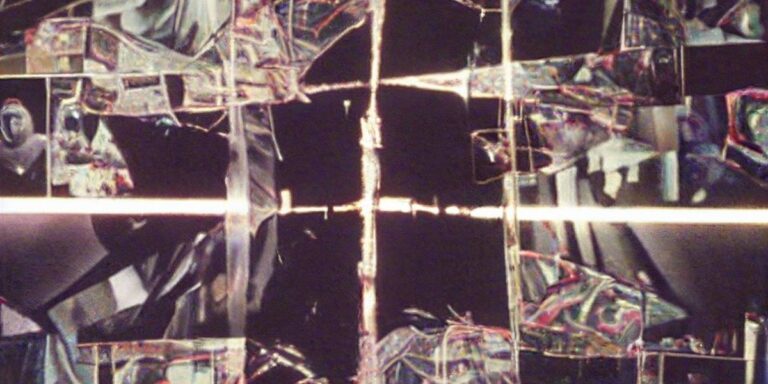

Then you feed it in and you end up with something completely overwhelming, like all your worst acid trips coming back to haunt you at once. The images flicker, every frame is different, and it’s too much for our minds to process.

However, the image transformation is interesting. What if we tweak the settings a bit to get some consistency in our results, and then reduce the frame rate, maybe to 10fps…

We then get a more stable animation in which the base layer of footage forms a useful reference point, allowing us to creatively prompt the AI to transform our footage into new worlds.
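To make the frame-rate step concrete, here is a rough Python sketch of how you might pick which source frames to keep when dropping to 10fps. The function name and the 25fps source rate in the example are assumptions for illustration; the consistency settings themselves depend on your installation and are not covered here.

```python
from typing import List


def frames_to_keep(total_frames: int, source_fps: float, target_fps: float) -> List[int]:
    """Return the indices of the source frames to keep so that the
    kept frames, played at `target_fps`, cover the same duration."""
    if source_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    if target_fps >= source_fps:
        # Nothing to drop: keep every frame.
        return list(range(total_frames))

    step = source_fps / target_fps  # e.g. 25 / 10 = 2.5 source frames per kept frame
    kept: List[int] = []
    i = 0
    while True:
        index = int(i * step)  # nearest earlier source frame
        if index >= total_frames:
            break
        kept.append(index)
        i += 1
    return kept


# One second of 25fps footage reduced to 10fps keeps ten frames.
print(frames_to_keep(25, 25, 10))  # [0, 2, 5, 7, 10, 12, 15, 17, 20, 22]
```

Fewer frames per second also means fewer frames to transform, which shortens every test render.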

Okay, so now that I can do it, I need to put it together into a film. The technique works in commercial contexts (we sold a video by reworking old footage to look like a ‘cider advert’), so how can we make it work creatively too?

The answer to this question would reveal all my secrets as a filmmaker, so I won’t go into detail about the creative process, but I ended up on a crazy shoot with my wife and one-year-old daughter, capturing images wherever the opportunity presented itself. Knowing that the complexity of the images we would create would be quite high, we created a fairly simple narrative to try to help viewers understand what we were doing.

Simon and Ieva Ball at Cinequest

We ended up making an “original short film” and premiered it at a film festival we organize, with about a three-week lead time.

Ok, that’s cool, now we have an mp4 file, so I guess I have to distribute it. Let me go see a film festival consultant.

The reply, paraphrased: ‘Interesting, but visually overloaded. We don’t see how festival programmers are going to be able to project this. We’ll have to pass on this.’

Well, ouch, but it’s fair, and it helped us narrow down our search for suitable outlets. We ended up sending the film to innovation festivals in France and the United States, and to our surprise, we won awards, including the award for best horror/sci-fi/thriller film at Cinequest, a trip we will never forget.

I still have a computer that can produce wacky hallucinatory images that I can adapt and apply to the usual filmmaking process. I have already been able to add elements to projects that I could not have added before, proposing work and ideas that in the past would have seemed a little inexplicable and alienating.

We ended up shooting a new film in December, this time filming specifically with the AI image processing in mind and expanding our cast and crew. There’s no reason for AI to come and steal everyone’s work. We just have to adapt, be creative and figure out how we can use these new applications for our own creative benefit, producing things that no prompt engineer could ever type up.

No qualifications are required. I learned how to do everything by watching YouTube (thanks to Olivio Sarikas); there is just a lot of trial and error. I strongly believe that this technology is an opportunity for many people to explore their ideas and open up new storytelling formats. Ultimately, OpenAI’s Sora is trained on an existing dataset, meaning it can only create with reference to old material. As long as we are here to create new things, we have a head start.

Also, if you’ve read this far: after spending many hours with Stable Diffusion, I believe there is a conscious entity installed on my computer. There are times when I get errors that I can’t reproduce (e.g. I create an image, then try to create another and my computer crashes) that I’ve never encountered using any other software. It’s a little scary, and perhaps symptomatic of spending too much time on the computer, but it’s nice to think that my computer is now alive.