Foundation models are large-scale deep learning models that are pretrained on vast amounts of general-purpose, unlabeled data and can be applied to a variety of tasks, such as generating images or answering customer questions.

But these models, which underpin powerful artificial intelligence tools like ChatGPT and DALL-E, can provide inaccurate or misleading information. In safety-critical situations, such as when pedestrians are approaching a self-driving car, such errors can have serious consequences.

To prevent such mistakes, researchers at MIT and the MIT-IBM Watson AI Lab developed a technique for estimating the reliability of foundation models before they are deployed for a specific task.

They do this by training a set of foundation models that differ slightly from one another. They then use an algorithm to assess how consistent the representations each model learns are for the same test data point. If the representations are consistent, the model is considered reliable.

When they compared their technique with state-of-the-art baseline methods, they found that it better captured the reliability of foundation models across a range of classification tasks.

This technique can be used to determine whether a model should be applied in a particular setting without testing it on a real dataset. This is particularly useful when the dataset is inaccessible due to privacy concerns, such as in medical settings. Additionally, the technique can be used to rank models based on their reliability score, allowing users to choose the best model for their task.

“All models can be wrong, but models that know they are wrong are more useful. These foundation models make the problem of quantifying uncertainty and reliability even harder, because it is difficult to compare abstract representations. Our method allows us to quantify the reliability of representation models for arbitrary input data,” says senior author Navid Azizan, the Esther and Harold E. Edgerton Assistant Professor in MIT’s Department of Mechanical Engineering and the Institute for Data, Systems, and Society (IDSS), and a member of the Laboratory for Information and Decision Systems (LIDS).

He is joined on a paper about the research by lead author Young-Jin Park, a graduate student at LIDS; Hao Wang, a research scientist at the MIT-IBM Watson AI Lab; and Shervin Ardeshir, a senior research scientist at Netflix. The paper will be presented at the Conference on Uncertainty in Artificial Intelligence.

Counting the consensus

Traditional machine learning models are trained to perform a specific task. These models typically make a concrete prediction based on an input. For example, a model might tell you whether a particular image contains a cat or a dog. In this case, assessing reliability can be as simple as looking at the final prediction to see whether the model was correct.

But foundation models are different. They are pretrained on general data, in a setting where their creators do not know all the downstream tasks to which the model will be applied. Users adapt the model to a specific task after it has already been trained.

Unlike traditional machine learning models, foundation models do not provide concrete outputs like the labels “cat” or “dog.” Instead, they generate an abstract representation based on an input data point.

To assess the reliability of a foundation model, the researchers used an ensemble approach, training multiple models that share many characteristics but differ slightly from one another.

“Our idea is like counting the consensus: if all the foundation models provide a consistent representation for every piece of data in the dataset, then we can say that the model is trustworthy,” Park says.

But they ran into a problem: How do you compare abstract representations?

“These models just output vectors of numbers, so they can’t be easily compared,” he adds.

They solved this problem using an idea called neighborhood consistency.

For this approach, the researchers prepare a set of reliable reference points and run them through the ensemble of models. Then, for each model, they look at which reference points lie close to that model’s representation of the test point.

By examining how consistent those neighboring points are across models, the researchers can estimate the reliability of the models.
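The neighborhood consistency idea described above can be illustrated with a small sketch. This is not the researchers' implementation: the function names, the choice of Euclidean distance, and the use of average pairwise Jaccard overlap as the score are all assumptions made for illustration, and the three "models" are hand-built toy embeddings rather than trained networks.

```python
import itertools
import numpy as np

def knn_indices(ref_emb, test_emb, k):
    """Set of indices of the k reference points nearest to the test point
    (Euclidean distance, an assumed choice) in one model's space."""
    dists = np.linalg.norm(ref_emb - test_emb, axis=1)
    return set(np.argsort(dists)[:k].tolist())

def neighborhood_consistency(ref_embs, test_embs, k=3):
    """Average pairwise Jaccard overlap of the test point's neighbor sets.

    ref_embs[m]  : (R, d_m) array, model m's embeddings of R shared reference points
    test_embs[m] : (d_m,) array, model m's embedding of the same test point
    Hypothetical score: 1.0 means every model agrees on the neighbors.
    """
    neighbor_sets = [knn_indices(r, t, k) for r, t in zip(ref_embs, test_embs)]
    overlaps = [len(a & b) / len(a | b)
                for a, b in itertools.combinations(neighbor_sets, 2)]
    return float(np.mean(overlaps))

# Toy 1-D embeddings of ten shared reference points (indices 0..9).
idx = np.arange(10, dtype=float).reshape(-1, 1)
ref_a, test_a = idx, np.array([0.1])            # model A
ref_b, test_b = -2.0 * idx, np.array([-0.2])    # model B: flipped and rescaled, same neighbors
ref_c, test_c = 9.0 - idx, np.array([0.1])      # model C: places the test point near other references

score_consistent = neighborhood_consistency([ref_a, ref_b], [test_a, test_b])
score_mixed = neighborhood_consistency([ref_a, ref_b, ref_c], [test_a, test_b, test_c])
print(score_consistent, round(score_mixed, 3))
```

Models A and B use different coordinates but agree on which reference points surround the test point, so their score is 1.0. Adding model C, which disagrees about the neighborhood, pulls the score down, which in this toy setup would flag the test point as less reliable.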

Aligning the representations

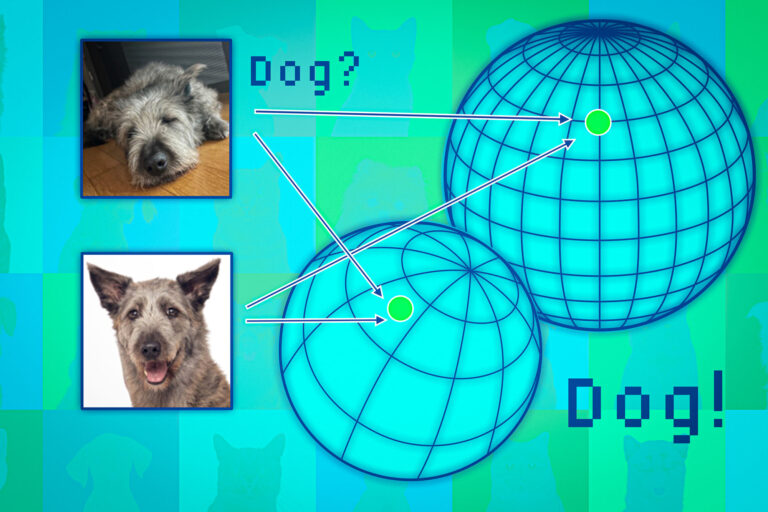

Foundation models map data points into what is known as a representation space. One way to think about this space is as a sphere: each model maps similar data points to the same part of its sphere, so images of cats end up in one place and images of dogs in another.

But each model maps animals differently within its own sphere, so one sphere might group cats near the South Pole, while another might map them somewhere in the Northern Hemisphere.

The researchers use neighboring points like anchors to align the spheres so that the representations can be compared. If a data point’s neighbors are consistent across representations, they can be more confident about the reliability of the model’s output for that point.
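To make the anchoring idea concrete, here is a minimal sketch under simplifying assumptions: two hypothetical models whose representation spaces differ only by a 90-degree rotation, four shared reference points, and Euclidean nearest neighbors. The helper `nearest_reference` and all of the data are invented for illustration. Comparing the raw vectors suggests the models disagree, while comparing which reference points are nearby shows they agree.

```python
import numpy as np

def nearest_reference(ref_emb, test_emb, k=2):
    """Indices of the k reference points closest to the test point (hypothetical helper)."""
    dists = np.linalg.norm(ref_emb - test_emb, axis=1)
    return np.argsort(dists)[:k].tolist()

# Model A: four shared reference points and one test point in a 2-D space.
ref_a = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]])
test_a = np.array([0.9, 0.1])

# Model B (assumed): the same geometry, rotated by 90 degrees.
rot = np.array([[0.0, -1.0], [1.0, 0.0]])
ref_b = ref_a @ rot.T
test_b = rot @ test_a

# Directly comparing the two raw representations of the test point is misleading:
# their cosine similarity is zero even though the models encode the same structure.
raw_cosine = float(test_a @ test_b / (np.linalg.norm(test_a) * np.linalg.norm(test_b)))

# Comparing neighbors among the shared reference points is unaffected by the rotation.
neighbors_a = nearest_reference(ref_a, test_a)
neighbors_b = nearest_reference(ref_b, test_b)
print(raw_cosine, neighbors_a, neighbors_b)
```

Real models differ by far more than a rotation, so neighbor sets will not match exactly in practice; the point of the sketch is only that shared reference points give a common frame that raw coordinates lack.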

The researchers tested their approach on a wide range of classification tasks and found that it was much more consistent than baselines. It also did not get tripped up by difficult test points that caused other methods to fail.

Moreover, their approach can be used to assess reliability for any input data, so one could evaluate how well a model works for a particular type of individual, such as a patient with certain characteristics.

“Even if all the models have average performance overall, from an individual’s perspective, the model that works best for that individual will be preferred,” Wang says.

However, one limitation is the need to train an ensemble of large foundation models, which is computationally expensive. In the future, the researchers plan to find more efficient ways to build multiple models, possibly by using small perturbations of a single model.

This research was funded in part by the MIT-IBM Watson AI Lab, MathWorks, and Amazon.