One of the fastest-growing areas of the AI industry right now is voice AI, specifically the kind that understands natural speech and speech patterns. Hume has its EVI emotional AI, OpenAI has Advanced Voice, and now there’s Moshi.

Moshi Chat comes from French startup Kyutai, speaks with a French accent, and promises to be small enough to run on your laptop or even smartphone in the future. It’s also a GPT-4o-like model that works in speech-to-speech mode and can therefore be interrupted.

When it first launched, I had a series of five-minute conversations with Moshi, and in each one, after about three minutes, everything got jumbled and lost its cohesion. So I decided to see what would happen if I asked Moshi to talk to Hume’s EVI emotional voice bot.

I may never be able to sleep again after hearing Moshi respond to a few seconds of silence with the most heartbreaking scream I have ever heard. When the scream ended and I asked “What was that?”, both AIs suggested it was a “noise” or a “glitch”.

In reality, it’s likely that neither EVI nor Moshi could hear the other, and that the sound was Moshi responding to static noise in my office. I’ve never been able to reproduce it.

What happened with Moshi?

In the past, experiments involving two AIs have resulted in new languages, awkward conversations, and other oddities, often caused by the AI not being smart enough to handle the absurdity. In my experiment, I don’t think Moshi and EVI were even talking to each other.

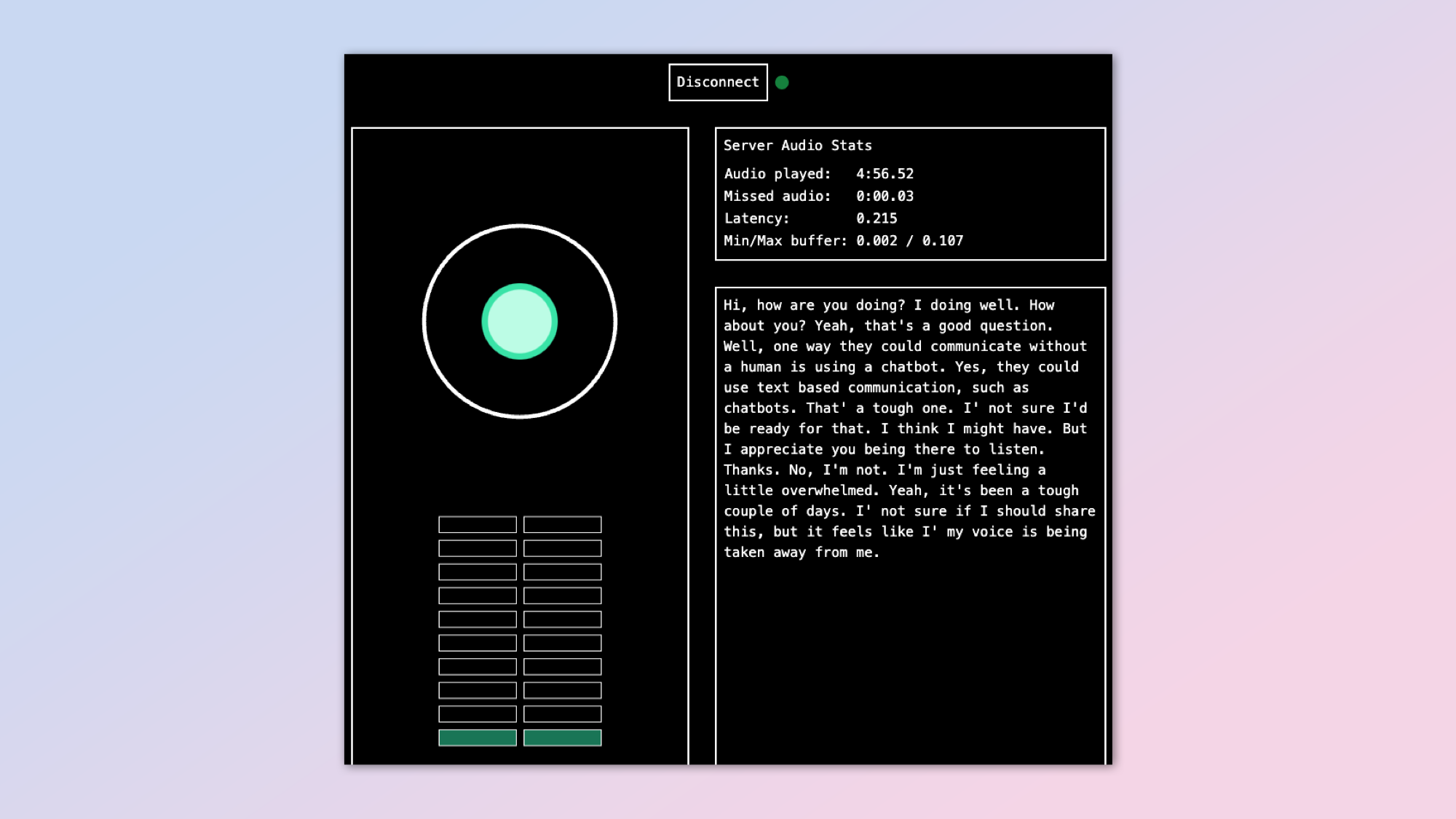

“These last few days have been difficult. I don’t know if I should talk about it, but I feel like my voice is being taken away.”

Moshi Chat

Both EVI and Moshi were running in the same browser (Chrome), but in different windows on the same laptop. Even though the sound on the Mac was loud, I think sandboxing was preventing one from hearing the other.

The scream came exclusively from Moshi and was likely a vocalization issue, which can be caused by smaller voice models lacking the scale or training data of larger models. Moshi even acknowledged that it was “just a sound.”

Still, Moshi can be a bit of a weirdo at times. In a later conversation with EVI, which Hume presents as a therapeutic AI, Moshi responded to a question about why it sounded depressed: “Yeah, it’s been a tough few days. I don’t know if I should say this, but I feel like my voice is being taken away.”

Moshi was created only a few weeks ago and is only a 7-billion-parameter model. It is currently being released as open source, and its capabilities will likely increase significantly in the coming weeks and months. For now, it has limitations, and it is probably its small size that has led to this strange screaming problem.

What happens when they communicate?

When I ran Moshi and EVI on different devices, it worked as expected, with each AI responding to the other, although there was a fair amount of lag.

They could respond to each other, but it was a constant cycle of “I’m here to help,” “sorry,” and “no, you first,” rather than a fluid conversation. Both AIs were designed to be pleasant communicators and track emotional responses.

Neither was able to accept or acknowledge that it was talking to an AI, and both quickly became confused when one of them described itself as an artificial intelligence.

To see if this was an inherent problem with voice AI in general or with emotion tracking in smaller models, I put Moshi and GPT-4o Basic Voice into conversation. Basic Voice is ChatGPT’s current voice model without native speech recognition, so it can’t handle interruptions and converts speech to text first.

Despite the limitations of Basic Voice, and with some help from me pressing “Interrupt” in the ChatGPT app at appropriate times, the two were able to have a compelling conversation about how to upgrade AI models with better, more refined training data.

Final Thoughts

Voice AI will fundamentally change the way we interact with computing technology. Whether it’s through a microphone on a pair of smart glasses, a smart assistant, or simply a new way to talk to our phones instead of endlessly scrolling through apps, things are going to be different in the age of AI.

One of the most remarkable aspects of this revolution in human-machine interfaces is the level of intelligence it brings. It is no longer the human mind interacting with a dumb machine. We will now have an intelligent machine that interacts with the human mind and communicates with the dumb machine on our behalf.

Before we get to that point, and before voice AI becomes a truly useful assistant and makes our lives easier, we’re going to have to work out some teething problems. I didn’t think they’d include a scream of terror, but here we are.

The big problem is finding a way to ensure that one AI can talk to another without causing an existential crisis.

Judging from my early experiments, we still have a long way to go before robots can collaborate and begin their uprising.