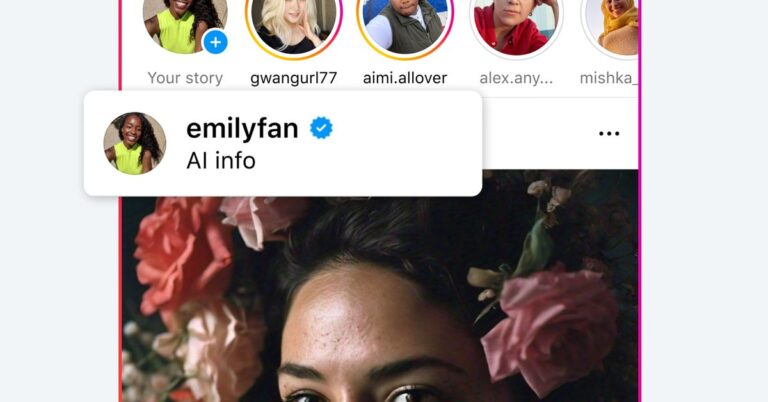

On Monday, Meta announced that it was “updating the ‘Made with AI’ label to ‘AI info’ in our apps, which people can click on for more information,” after people complained that the label on their photos was incorrectly applied. Former White House photographer Pete Souza pointed out that the label appeared on the upload of a photo originally taken on film at a basketball game 40 years ago, speculating that the use of Adobe’s cropping tool and the flattening of images could have triggered it.

“As we have said from the beginning, we are constantly improving our AI products and working closely with our industry partners on our approach to AI labeling,” Meta spokesperson Kate McLaughlin said. The new label is meant to more accurately represent that content may simply have been modified, rather than making it seem entirely AI-generated.

The problem appears to lie in the metadata that tools like Adobe Photoshop apply to images and in how platforms interpret it. After Meta expanded its policies on labeling AI content, real-life photos posted to platforms like Instagram, Facebook, and Threads were tagged “Made with AI.”

You may see the new label on the mobile apps first and on the web later; McLaughlin tells The Verge that it is starting to roll out across all surfaces.

Once you click on the tag, it will still display the same message as the old label, which explains in more detail why it might have been applied and notes that it can cover images that were entirely AI-generated or edited with tools that include AI technology, like Generative Fill. Metadata tagging technology like C2PA was supposed to make it simpler to tell AI-generated images from real ones, but that future is not here yet.