After Meta began labeling photos with a “Made with AI” tag in May, photographers complained that the social networking company was applying the tag to real photos they had only touched up with basic editing tools.

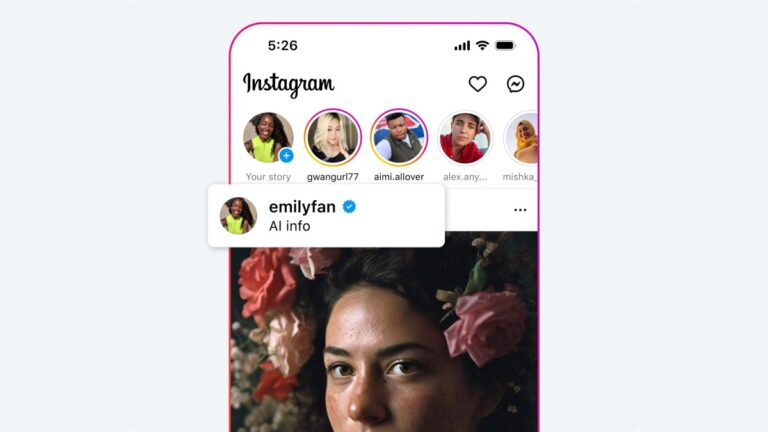

In response to user feedback and general confusion about how much AI was used in a given photo, the company is changing the tag to “AI Info” across all Meta apps.

Meta said the earlier version of the tag did not make it clear enough to users that a tagged image was not necessarily created with AI, but may only have been edited with AI-based tools.

“Like others in the industry, we found that our labels based on these indicators were not always aligned with user expectations and did not always provide enough context. For example, some content that included minor edits using AI, such as retouching tools, included industry standard indicators that were then labeled ‘Made with AI,’” the company said in an updated blog post.

The company is not changing the underlying technology for detecting and labeling AI use in photos. Meta still relies on technical metadata standards such as C2PA and IPTC, which can record whether AI tools were used on an image.

This means that if photographers use tools like Adobe’s Generative Fill to remove objects, their photos can still be tagged with the new label. However, Meta hopes the new label will help people understand that a tagged image was not necessarily created entirely by AI.

“‘AI Info’ can encompass content that has been created and/or edited with AI, so the hope is that this will become more in line with people’s expectations as we work with companies across the industry to improve the process,” Meta spokesperson Kate McLaughlin told TechCrunch via email.

The new tag still won’t solve the problem of fully AI-generated photos going unnoticed. Nor will it tell users how much AI editing was performed on an image.

Meta and other social networks will have to establish guidelines that are fair to photographers who haven’t changed their editing workflows but whose editing tools now include generative AI features. For their part, companies like Adobe should warn photographers that using certain tools may cause their images to be flagged on other services.